NDI Bridge, SRT and vMix in the Cloud (Chapter 11)

By Paul Richards

- 7 Aug, 2024

The NDI Bridge, SRT & vMix in the Cloud

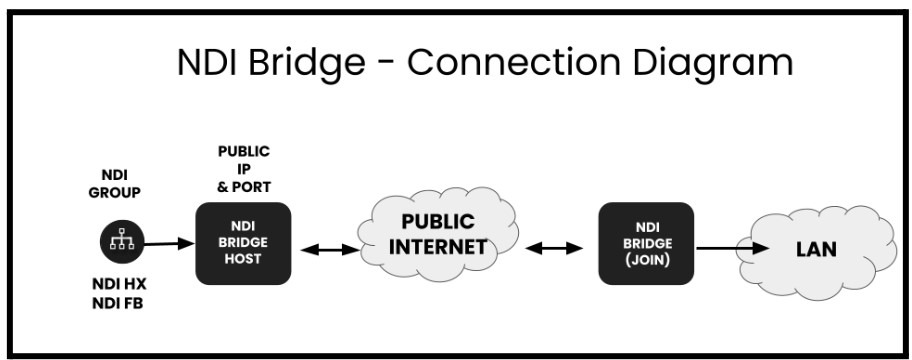

NDI Bridge is a tool for sharing NDI video sources beyond a local area network (LAN) across a wide area network (WAN), which in practice usually means the public internet. NDI Bridge was released in 2021 with the NDI 5.0 toolset, along with the NDI Remote and Audio Direct tools. Until then, many video productions used NDI only for LAN video traffic and relied on technologies such as Secure Reliable Transport (SRT), or video communication solutions like Zoom, to move video over the public internet. NDI 5.0 supports Reliable User Datagram Protocol (RUDP), a point-to-point transport protocol that allows for high-quality video transport over public networks.

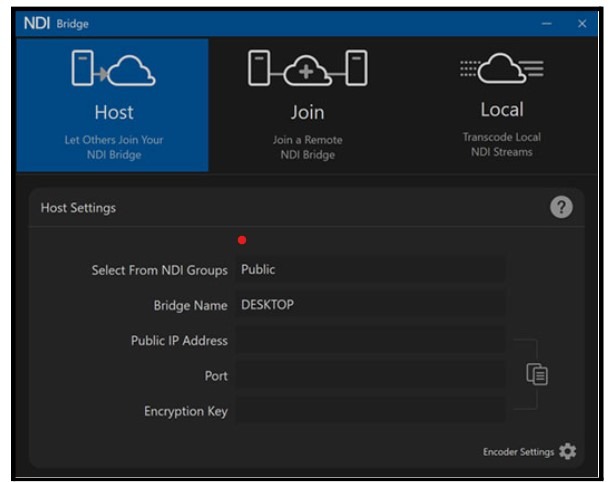

The NDI Bridge has three main components that allow users to advertise and transport NDI video over the WAN. The first is the Host connection, which allows others to join the NDI Bridge that is set up on one side of the connection. Here, you can select a group of NDI sources to be transported over the WAN to a receiving location anywhere in the world. You will learn more about setting up groups of NDI sources with Access Manager in the next chapter. Using NDI Groups, the NDI Bridge can send an entire group of NDI High Bandwidth (HB) or NDI HX video sources together over the public internet.

NDI Bridge requires a public IP address and an open port to operate properly. You can request a public IP address through your Internet Service Provider (ISP), or you can use a dynamic DNS provider like No-IP to use a hostname instead. The port for video traffic is opened by setting up port forwarding on the router connected to the WAN. Once the public IP address and open port are set up, the host connection can be reached over the public internet.
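As a minimal sketch of the requirement above, the Python below sanity-checks a host entry and port before they are typed into NDI Bridge. The helper name `bridge_endpoint`, the sample hostname, and the port value are illustrative assumptions, not part of the NDI tools.

```python
import ipaddress


def bridge_endpoint(host: str, port: int) -> str:
    """Return 'host:port' after basic sanity checks (hypothetical helper)."""
    if not 1 <= port <= 65535:
        raise ValueError(f"port {port} is outside 1-65535")
    try:
        address = ipaddress.ip_address(host)
    except ValueError:
        # Not a literal IP address, so treat it as a hostname
        # (for example, one supplied by a dynamic DNS provider).
        return f"{host}:{port}"
    if address.is_private or address.is_loopback:
        # LAN-only addresses cannot be reached from the far end of the bridge.
        raise ValueError(f"{host} is not reachable from the public internet")
    return f"{host}:{port}"


# Example values only; use the address and port you actually forwarded.
print(bridge_endpoint("studio.example.com", 5990))
```

A check like this only catches typing mistakes and LAN-only addresses. It does not confirm that the router is actually forwarding the port.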

From the far end of an NDI Bridge connection, the host’s public IP address (or hostname) and port number are needed to connect. Once both ends of the NDI Bridge are connected, the NDI sources available on the host side become available on the far end, where they can be used just like local NDI sources.

NDI Bridge supports NDI video source capabilities including alpha channel, PTZ controls, KVM, Tally, and metadata. Alpha channel support is necessary for many broadcast graphics applications, and graphics overlays are a common use case. Alpha channel video carries a transparent background, so it can be overlaid on top of another video source. In this way, NDI Bridge allows remote productions to bring alpha channel graphics into a production environment from anywhere in the world.

PTZ camera controls open up another interesting use case: NDI Bridge can send video from a PTZ camera while that camera is controlled from a remote location. KVM, a popular abbreviation for “Keyboard, Video, and Mouse,” can be used to pass along remote control of computer screens captured with KVM support.

NDI Bridge also maintains support for Tally, the technology that alerts camera operators and on-screen talent when a camera is in use. This allows a remote site to know when a specific NDI video source is being used in the production, even though the production is happening elsewhere. The Tally feature of NDI will be discussed in more detail in Chapter 19. Finally, some metadata is also transported over the NDI Bridge, including information such as friendly names for NDI sources. Metadata makes NDI video more usable by giving compatible NDI systems information they can display to producers working with the video.

While NDI Bridge does have some technical requirements before it can work, it provides powerful connectivity options. In comparison to established wide area network (WAN) video transport solutions like Secure Reliable Transport (SRT), NDI Bridge simplifies setup by requiring a single port to support multiple video channels. NDI Bridge gives many productions the opportunity to think outside of their own local area networks (LANs) and implement video projects that incorporate video from around the world.
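To make the single-port comparison concrete, here is a small sketch that lists the router ports to forward in each case. It assumes, as the comparison above implies, that each SRT stream gets its own port while one NDI Bridge host carries every source over one port. The function name and port numbers are hypothetical examples.

```python
def ports_to_forward(stream_count: int, first_port: int, one_port_per_stream: bool) -> list[int]:
    """List the router ports to forward for a given number of video streams."""
    if stream_count < 1:
        raise ValueError("stream_count must be at least 1")
    if one_port_per_stream:
        # Assumed SRT-style setup: consecutive ports, one per incoming stream.
        return list(range(first_port, first_port + stream_count))
    # Assumed NDI Bridge-style setup: every source shares the same port.
    return [first_port]


print(ports_to_forward(4, 9000, one_port_per_stream=True))
print(ports_to_forward(4, 5990, one_port_per_stream=False))
```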

NDI Bridge in Virtual Environments

Using NDI Bridge in a Virtual Machine (VM) offers a host of benefits for producing high-quality live events, particularly in optimizing resource efficiency, enhancing flexibility, improving performance, providing redundancy, and ensuring both security and ease of maintenance. Running NDI Bridge in a VM lets you allocate exactly the resources it needs, which frees the remaining resources for other tasks and improves overall hardware utilization.

VMs significantly enhance the flexibility of managing software configurations. They facilitate easy switching between setups and simplify the process of creating backups and replicating systems—key advantages in dynamic production environments. When VMs support Graphics Processing Unit (GPU) passthrough, this feature can dramatically improve the performance of NDI Bridge by harnessing the GPU’s capabilities for intensive video processing tasks such as encoding and decoding, which are crucial for real-time streaming.

Furthermore, operating NDI Bridge within a VM environment enables the maintenance of multiple instances, providing critical redundancy and ensuring backup instances are ready to deploy in case of system failure, thus enhancing the reliability of live productions. VMs also bolster the overall stability and security of the operating environment; by isolating NDI Bridge within a VM, any potential crashes or errors are confined to the VM itself, minimizing the risk to the entire system. Security issues within the VM are similarly contained.

In terms of maintenance, VMs make it easier to isolate problems and perform system troubleshooting. Should issues arise with NDI Bridge, the system can be quickly restored to a previous state, or a new VM can be set up without affecting the main operating system.

However, it’s important to acknowledge the potential drawbacks. Running NDI Bridge in a VM introduces additional complexity and overhead, and setting up GPU passthrough might be challenging depending on the specific hardware and software environment. These factors should be carefully weighed against the needs and constraints of the specific production environment to determine if using NDI Bridge in a VM is the most suitable approach.

Understanding SRT

SRT is a video transport protocol designed to send high-quality video over the public internet. SRT stands for Secure Reliable Transport. SRT can be used with many popular video production solutions including OBS, Wirecast, and vMix. In fact, there are over 450 members in the SRT Alliance. SRT is used by video producers small and large to enable remote productions all around the world.

Unlike NDI, which is designed for local area networks, SRT was designed for use over the public internet. This is done partially by managing a fixed amount of latency for each video stream. SRT video connections provide broadcast studios remote access to high definition video and audio that is usable for video production. For example, SRT is an ideal way to send video from reporters in the field who make remote video contributions. Broadcast studios can then receive that video in a way that it can be mixed into a news production.
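The fixed latency mentioned above is a setting you choose per stream. A commonly cited starting point in SRT deployment guidance is a multiple of the measured round-trip time (RTT), with a higher multiplier on lossier links. The sketch below assumes a multiplier of 4 and a 120 ms floor purely for illustration; both values, and the function name, are assumptions to tune against your own link.

```python
import math


def suggested_latency_ms(rtt_ms: float, multiplier: float = 4.0, floor_ms: int = 120) -> int:
    """Suggest an SRT latency setting from a measured round-trip time."""
    if rtt_ms <= 0:
        raise ValueError("rtt_ms must be positive")
    # Larger multipliers leave more time to recover lost packets.
    return max(floor_ms, math.ceil(rtt_ms * multiplier))


print(suggested_latency_ms(50))   # 50 ms RTT, for example measured with ping
print(suggested_latency_ms(10))   # short RTTs fall back to the floor
```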

SRT has made a name for itself by providing encryption that ensures secure transport of even the highest level production. SRT can enable end-to-end AES encryption which is ideal for any content that requires protection. SRT protects against video jitter and packet loss even during bandwidth fluctuations from unreliable WiFi or cellular connections.
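Encryption is typically enabled by entering the same passphrase on both ends of the connection. The sketch below checks settings before they are used, assuming the limits I understand the open source SRT library to apply (a passphrase of 10 to 79 characters and AES key lengths of 16, 24, or 32 bytes). The helper name is hypothetical, and your encoder or software may present these options differently.

```python
VALID_KEY_LENGTHS = (16, 24, 32)  # bytes: AES-128, AES-192, AES-256


def check_encryption(passphrase: str, key_length: int = 16) -> dict:
    """Validate SRT encryption settings and return them as a dict."""
    if not 10 <= len(passphrase) <= 79:
        raise ValueError("passphrase must be 10 to 79 characters long")
    if key_length not in VALID_KEY_LENGTHS:
        raise ValueError(f"key_length must be one of {VALID_KEY_LENGTHS}")
    return {"passphrase": passphrase, "pbkeylen": key_length}


settings = check_encryption("correct-horse-battery", key_length=32)
print(settings["pbkeylen"])
```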

As SRT hardware and software have become more affordable, everyday productions at schools, churches and state and local government agencies are using the solution. While SRT is used by some of the world’s largest live production companies, including Fox Sports, Comcast, and the NFL, the solution is open source and used by thousands of independent broadcasters.

SRT is helping to enable remote productions around the world, and there are a few things you should know to get started using SRT.

First of all, SRT uses the public internet. Therefore, you can either set up a peer-to-peer connection or use a proxy server to connect. A peer-to-peer connection requires a little networking knowledge. For example, you will need to know your public IP address (or dynamic DNS hostname), make sure your router is set up with port forwarding, and configure your video production software to receive the stream. There are great tutorials that step through this process, such as those on vMix’s YouTube channel. The second, easier way to get set up with SRT is to use SRTMiniServer in combination with its proxy server capabilities. This application, which costs $30 per month, receives SRT video feeds and converts them into NDI, which you can easily use with OBS, vMix, Wirecast and more.
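For the peer-to-peer case, many SRT tools accept the connection details as a single `srt://` address. The sketch below builds one, assuming the query-string style used by the SRT project’s sample applications (`mode`, `latency`, `passphrase`). The function name, hostname and port are hypothetical. Option names and units can differ between tools, so check the documentation for the software you use; vMix itself exposes these settings in its input dialog rather than as a URL.

```python
from typing import Optional
from urllib.parse import urlencode

VALID_MODES = ("caller", "listener", "rendezvous")


def srt_url(host: str, port: int, mode: str = "caller",
            latency_ms: Optional[int] = None,
            passphrase: Optional[str] = None) -> str:
    """Build an srt:// address from connection details (illustrative only)."""
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {VALID_MODES}")
    if mode != "listener" and not host:
        raise ValueError("caller and rendezvous modes need a remote host")
    params = {"mode": mode}
    if latency_ms is not None:
        params["latency"] = latency_ms
    if passphrase:
        params["passphrase"] = passphrase
    return f"srt://{host}:{port}?{urlencode(params)}"


# The sending side calls the studio's public hostname and forwarded port.
print(srt_url("studio.example.com", 9000, latency_ms=200))
# The receiving side listens on the forwarded port; no remote host is needed.
print(srt_url("", 9000, mode="listener"))
```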

You should know that SRT is not ideal for two-way communications like Zoom or Skype. Rather, SRT is ideal for one-way broadcasting scenarios like remote reporting and connecting video streams to a remote broadcast studio. One of our favorite use cases for SRT is remote production with a couple of 5G-connected cell phones. The Urbanist recently produced a multi-camera tour of NYC using the Larix Broadcaster app on iPhone 12 cameras. The phones sent 2-3 Mbps video streams back to a production PC, which then added broadcast elements such as live chat, graphics, overlays and more.
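A quick way to plan for a setup like this is to add up the incoming stream bitrates and leave some headroom for retransmitted packets. The 25% headroom below is an assumed planning margin, not a measured figure, and the function name is hypothetical.

```python
def required_downlink_mbps(stream_bitrates_mbps: list[float], headroom: float = 0.25) -> float:
    """Estimate the download bandwidth the production PC needs, in Mbps."""
    if headroom < 0:
        raise ValueError("headroom cannot be negative")
    # Total video payload plus a margin for SRT retransmissions and overhead.
    return sum(stream_bitrates_mbps) * (1 + headroom)


# Two phones, each sending a 3 Mbps stream.
print(required_downlink_mbps([3.0, 3.0]))
```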

Another great use of SRT is connecting two video production systems together. For example, consider a trade show with a live stream. A company can easily set up a few cameras on site, but they don’t need to bring an entire video production system with them. Instead, they can send each video and audio stream from the trade show floor back to their video production studio for broadcasting out to the world.

Note: When using the hardware encoding option in software like vMix, you can use an NVIDIA graphics card to decode SRT video streams. When doing so, consider how many encoding channels on your graphics card are already in use simultaneously. If your graphics card supports only a limited number of simultaneous encoding channels, you may need to use your CPU to decode SRT. Most NVIDIA GeForce graphics cards are limited to two hardware encodes per system, so if you are using hardware encoding for both recording and streaming, you cannot also use it for SRT with a GeForce graphics card. If you have a P2000 or higher-end Quadro card, the number of encodes is not capped in this way; Quadro graphics cards are limited only by the capabilities of the particular card.
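The budgeting described in the note above can be sketched as a simple allocation: tasks are given hardware sessions in priority order until the card’s limit is reached, and the rest fall back to the CPU. The task names and function are hypothetical, and the limit of two is taken from the note; actual limits depend on the card and driver.

```python
def assign_encoders(tasks: list[str], gpu_session_limit: int) -> dict[str, str]:
    """Assign each task to 'gpu' until the session limit is used up, then 'cpu'."""
    if gpu_session_limit < 0:
        raise ValueError("gpu_session_limit cannot be negative")
    return {
        task: ("gpu" if index < gpu_session_limit else "cpu")
        for index, task in enumerate(tasks)
    }


# Recording and streaming take both hardware sessions, so SRT uses the CPU.
plan = assign_encoders(["recording", "streaming", "srt"], gpu_session_limit=2)
print(plan)
```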

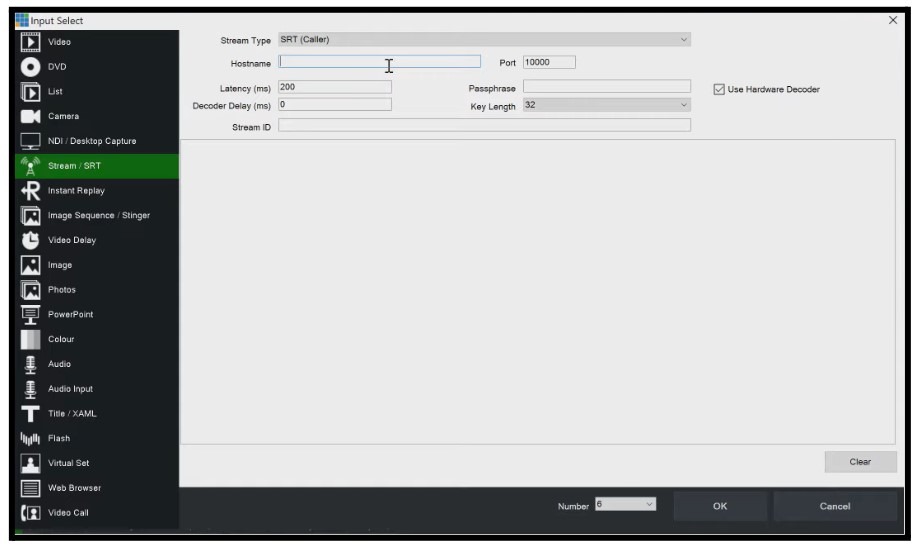

SRT & vMix

SRT has become a preferred method of video transport when sending video to a cloud production server. vMix supports SRT ingestion and the software is particularly good with pulling SRT video together for remote production. vMix supports SRT video as both an input and an output, allowing for flexibility in many complex productions. You can also use SRT video inputs with the vMix MultiCorder allowing you to record each incoming SRT stream independently for post-production.

Running vMix in the cloud involves setting up a Windows server instance with specific requirements, including a directly attached graphics card with virtual display support, typically provided under technologies like NVIDIA Grid. This setup ensures that vMix can leverage the powerful graphics processing capabilities needed for high-quality live video production. Cloud deployment of vMix is ideal for users needing flexible, high-performance broadcasting capabilities without the physical constraints of traditional hardware setups. However, it’s crucial to test the cloud setup thoroughly to ensure it meets the demands of live production, especially since performance can vary due to the shared nature of cloud services.

You can learn more about running vMix in the cloud in Chapter 10, Advanced Topics in Remote Production.

KEY TAKEAWAYS FROM THIS CHAPTER:

- NDI Bridge Enhances Remote Production: Released as part of the NDI 5.0 toolset in 2021, NDI Bridge allows the extension of NDI video sources beyond a local area network using the public internet. This technology uses Reliable User Datagram Protocol (RUDP) to facilitate high-quality video transport over wide area networks (WAN), effectively connecting distant production sites.

- Technical Setup for NDI Bridge: For effective use, NDI Bridge requires a public IP address or dynamic DNS hostname and an open port, allowing video traffic through the internet. Users can set up a host connection, select NDI sources to be shared over the WAN, and establish secure and efficient video source transportation to any global location.

- Advanced Features Supported by NDI Bridge: NDI Bridge supports comprehensive video production capabilities including alpha channel for broadcast graphics, PTZ camera controls, KVM for remote screen control, Tally for on-air source indication, and metadata transport. These features enable a broad range of remote production scenarios.

- Utilization in Virtual Environments: NDI Bridge can be operated within a virtual machine (VM) to enhance resource efficiency, flexibility, performance, security, and ease of maintenance. VMs allow for precise resource allocation and simplify the process of system backup and recovery, making NDI Bridge more robust in dynamic production environments.

- SRT and vMix Integration for Cloud-Based Production: Secure Reliable Transport (SRT) has become a preferred method for transmitting video to cloud production servers, with vMix offering robust support for SRT ingestion. This integration allows vMix to handle SRT video both as an input and output, adding substantial flexibility to complex productions. Additionally, vMix can run in a cloud environment on a Windows server with a high-performance graphics card, making it a versatile option for remote broadcasting where physical hardware limitations are a constraint. This setup is particularly effective for productions that require high-quality live video processing capabilities, though it is essential to thoroughly test the cloud deployment to ensure optimal performance during live productions.
