Challenges and Solutions in Remote Production


By Paul Richards, 9 Aug 2024


Remote production has become increasingly prevalent in the media and broadcast industry, driven by advancements in technology and the need for more flexible content creation methodologies. While this shift offers numerous benefits, such as cost reduction and the ability to produce content from virtually anywhere, it also introduces a set of unique challenges that can impact the quality and efficiency of productions. Understanding these challenges is crucial for developing effective strategies to overcome them and ensure successful remote production operations.

More on SMPTE 2110

SMPTE 2110 is designed for high-end, large-scale productions and can carry uncompressed video for the highest picture quality. It separates video, audio, and ancillary data into independent streams for easier management, and it uses Precision Time Protocol (PTP) synchronization to keep timing precise across devices.
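To make the PTP point concrete: in SMPTE 2110 every essence stream stamps its RTP packets from the same PTP-derived time, counting ticks at that stream's media clock rate (90 kHz for video, the sample rate for audio). The minimal sketch below assumes you already have a locked PTP time in nanoseconds; getting it from a PTP client is out of scope, and the example time value is hypothetical.

```python
# Sketch: deriving SMPTE 2110-style RTP timestamps from a shared PTP time.
# Assumption: ptp_ns is the current PTP time in nanoseconds since the PTP epoch,
# read from a locked PTP client (not shown).

VIDEO_CLOCK_HZ = 90_000  # RTP media clock for 2110-20 video
AUDIO_CLOCK_HZ = 48_000  # RTP media clock for 48 kHz audio (2110-30)


def rtp_timestamp(ptp_ns: int, clock_hz: int) -> int:
    """Return the 32-bit RTP timestamp for a PTP time and media clock rate."""
    # Count whole media-clock ticks since the epoch, then wrap to 32 bits as RTP requires.
    ticks = (ptp_ns * clock_hz) // 1_000_000_000
    return ticks % (1 << 32)


if __name__ == "__main__":
    ptp_ns = 1_700_000_000_123_456_789  # hypothetical PTP reading
    print("video RTP timestamp:", rtp_timestamp(ptp_ns, VIDEO_CLOCK_HZ))
    print("audio RTP timestamp:", rtp_timestamp(ptp_ns, AUDIO_CLOCK_HZ))
```

Because both streams derive their timestamps from one clock, a receiver can line up audio and video sampled at the same instant even though they arrive as separate streams.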

Choosing Between NDI and SMPTE 2110

The choice between NDI and SMPTE 2110 depends on specific production requirements, resources, and scale. NDI is ideal for productions where simplicity and cost are critical, making it a good choice for smaller production houses or those new to IP-based workflows. SMPTE 2110 is suited for environments that demand the highest quality and control, typical in professional broadcasting and large-scale events.

Many organizations may find benefits in using both standards, leveraging NDI for simpler tasks and SMPTE 2110 for projects requiring high fidelity and precise synchronization. Both NDI and SMPTE 2110 offer valuable features tailored to different needs within the video production industry. By understanding the capabilities and limitations of each, you can choose the most appropriate technology or combination of technologies to meet the specific demands of your projects.
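Scale and resources are easier to compare with a quick bandwidth estimate. This sketch computes the active-picture bitrate of an uncompressed video stream of the kind SMPTE 2110 can carry. It ignores RTP/UDP/IP overhead, and the 1080p60 4:2:2 10-bit format is only an illustrative assumption.

```python
def active_video_bps(width: int, height: int, fps: int, bits_per_pixel: int) -> int:
    """Bitrate of the active picture only: no blanking, no RTP/UDP/IP overhead."""
    return width * height * fps * bits_per_pixel


# 4:2:2 at 10 bits: every pixel has a 10-bit luma sample, and Cb/Cr alternate
# between pixels, so the average is 20 bits per pixel.
BITS_422_10BIT = 20

if __name__ == "__main__":
    bps = active_video_bps(1920, 1080, 60, BITS_422_10BIT)
    print(f"1080p60 4:2:2 10-bit: {bps / 1e9:.2f} Gbps")  # about 2.49 Gbps
```

At roughly 2.49 Gbps, a single uncompressed HD stream already exceeds a 1 GbE link, which is why SMPTE 2110 facilities are typically built on 10 GbE or faster networks.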

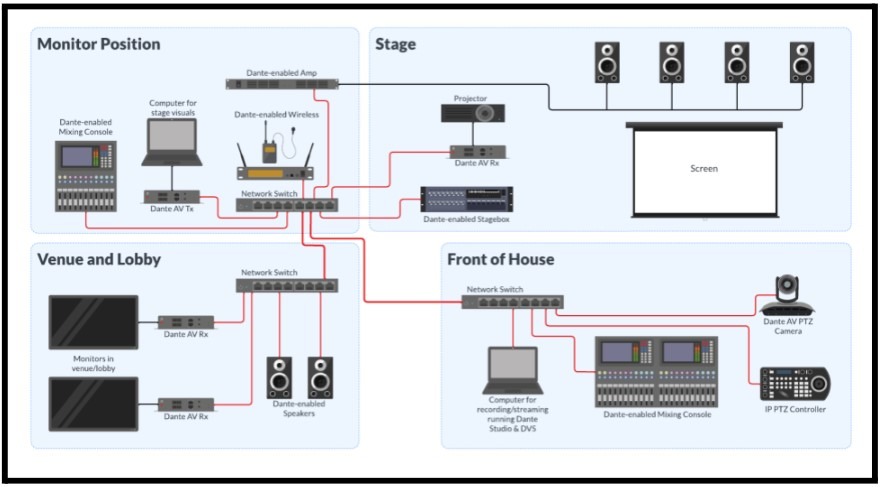

More on Dante AV

Dante supports several video formats for different use cases, including Dante AV Ultra, Dante AV-A, and Dante AV-H. Dante Controller is used to configure and route both audio and video devices. Dante's solutions can operate on standard 1 GbE network infrastructure, but each Dante video source you add consumes additional bandwidth. For example, each Dante AV Ultra source requires 700 Mbps, while a Dante AV-H source requires only 30 Mbps.
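The per-source figures above translate directly into a link budget. This sketch uses only those two numbers; the 30% headroom reserve is an assumption, and it ignores audio, control, and other traffic that shares the link.

```python
# Per-stream bandwidth figures quoted in the text (Mbps).
STREAM_MBPS = {
    "Dante AV Ultra": 700,
    "Dante AV-H": 30,
}


def max_streams(link_mbps: int, stream_mbps: int, headroom_pct: int = 0) -> int:
    """Number of whole streams that fit on a link after reserving headroom_pct percent."""
    # Integer arithmetic avoids floating-point rounding at exact boundaries.
    usable_x100 = link_mbps * (100 - headroom_pct)
    return usable_x100 // (stream_mbps * 100)


if __name__ == "__main__":
    for name, mbps in STREAM_MBPS.items():
        print(f"{name}: {max_streams(1000, mbps)} per 1 GbE link, "
              f"{max_streams(1000, mbps, headroom_pct=30)} with 30% headroom")
```

On these numbers a 1 GbE link carries one AV Ultra source, or 33 AV-H sources (23 with the assumed headroom).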

| Feature | Dante AV Ultra | Dante AV-A | Dante AV-H |
|---|---|---|---|
| Resolution support | Up to 4Kp60 4:4:4 | Up to 4Kp60 4:4:4 | Up to 4Kp60 4:2:0 |
| Latency | Sub-frame | Sub-frame | A few frames |
| Interoperability of products using the same codec | Yes | Yes | Yes |
| Dante Controller support | Yes | Yes | Yes |
| Dante Domain Manager and Dante Director support | Yes | No | Yes |
| Dante Studio software support | Yes | No | Yes |
| HDCP support | Yes | Yes | No |
| Common AV clock | Yes | No | No |
| Encode/decode from SDI | Yes | No | No |

One important Dante tool is Dante Studio, which can convert Dante video into a virtual webcam, making it easy to bring video flows into other applications and platforms. Users can integrate Dante-enabled PTZ cameras and sources into video meeting platforms such as Teams and Zoom, capture video content for CMS platforms like Panopto, and add video streaming or recording to live events using software like OBS or vMix. Extending Dante into video also simplifies system maintenance, while security is managed through Dante Domain Manager and Dante Director.

Advanced IP Audio Tools for Remote Production:

AES67 is an important standard in networked audio, and it plays a significant role in audio management across remote broadcast scenarios. It enables high-quality audio streaming over IP networks and interoperability between different networked audio systems. Here's a detailed look at AES67 and how it fits into remote production environments:

What is AES67?

AES67 is an open standard for audio over IP and for interoperability among proprietary audio-networking systems. It was developed by the Audio Engineering Society (AES) to enable high-performance audio networking between devices and systems that previously could not communicate because of differing underlying technologies. AES67 ensures that audio-over-IP systems built on different protocols, such as Dante, RAVENNA, or Q-LAN, can share audio streams across a network.
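A quick way to see what an AES67 stream looks like on the wire is to size its packets. This sketch assumes the common interoperability baseline of 48 kHz sampling, 1 ms packet time, and 24-bit linear PCM (L24); other packet times and bit depths can be passed in as parameters.

```python
# Assumed AES67 baseline: 48 kHz sampling, 1 ms packet time, L24 (24-bit PCM).
RTP_HEADER_BYTES = 12  # fixed RTP header, no CSRC list or extensions


def samples_per_packet(sample_rate_hz: int = 48_000, packet_time_us: int = 1_000) -> int:
    """Samples per channel carried in one packet."""
    return sample_rate_hz * packet_time_us // 1_000_000


def payload_bytes(channels: int, sample_rate_hz: int = 48_000,
                  packet_time_us: int = 1_000, bits_per_sample: int = 24) -> int:
    """RTP payload size: interleaved samples for every channel in one packet."""
    bytes_per_sample = bits_per_sample // 8
    return samples_per_packet(sample_rate_hz, packet_time_us) * channels * bytes_per_sample


if __name__ == "__main__":
    for ch in (2, 8):
        body = payload_bytes(ch)
        print(f"{ch} ch: {body} B payload, {body + RTP_HEADER_BYTES} B with RTP header")
```

With 1 ms packets, each stream sends 1,000 packets per second, so shorter packet times lower latency at the cost of more packets for the network to handle.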

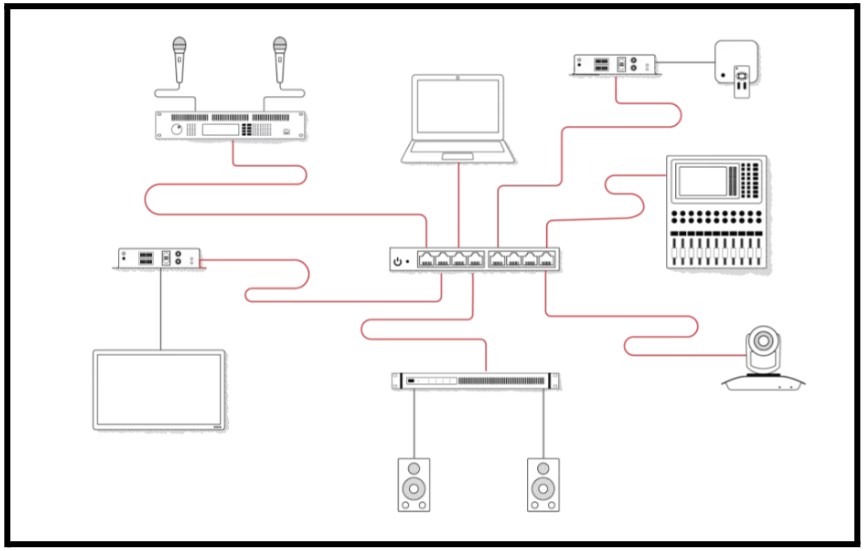

Dante for Remote Audio Production

Dante (Digital Audio Network Through Ethernet) is a network audio protocol that transmits multiple channels of uncompressed audio across a standard Ethernet network. It is highly valued in remote productions for its low-latency, high-resolution audio transfer. Dante simplifies complex audio setups by replacing point-to-point cabling with software-based routing, so audio from different locations on the network can be managed and mixed without physical proximity constraints. Its ability to integrate with existing network infrastructure makes it a strong fit for live event productions, broadcast environments, and multi-site venues.

Dante Connect enhances remote production by delivering synchronized audio from on-site Dante networks directly to cloud services. This integration allows for a more efficient use of personnel and hardware on the ground. By centralizing audio production in the cloud, broadcasters can significantly reduce costs and streamline their workflows. The use of Dante-enabled AV-over-IP devices ensures reliable and high-quality audio transmission, making Dante Connect an ideal solution for modern broadcasting environments that require flexibility and scalability.

Dante Director is a cloud-based software as a service (SaaS) application that facilitates the organization and management of Dante devices into logical groups. It allows for enhanced user access management, device security, and remote management of one or more Dante networks. This tool is crucial for maintaining control over complex audio setups across distributed locations.

Dante Controller routes AV signals between all Dante-enabled devices on a network. It saves configurations directly to the devices, ensuring network stability through power cycles, device disconnections, and system reconfigurations. This robust management tool is essential for maintaining seamless audio flows and ensuring high reliability in live production settings.
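The reason routes survive power cycles is that each subscription lives on the receiving device rather than in the controller software. The class below is a hypothetical model written only to illustrate that idea; it is not a Dante API, and the device and channel names are made up. It uses the "TxChannel@TxDevice" notation that Dante Controller displays for subscriptions.

```python
from dataclasses import dataclass, field


@dataclass
class DanteReceiver:
    """Hypothetical model of a Dante receiving device (not a real Dante API)."""
    name: str
    # Receive channel -> subscription written as "TxChannel@TxDevice".
    subscriptions: dict[str, str] = field(default_factory=dict)

    def subscribe(self, rx_channel: str, tx_channel: str, tx_device: str) -> None:
        # The route is stored on the receiving device, not in the controller.
        self.subscriptions[rx_channel] = f"{tx_channel}@{tx_device}"

    def after_power_cycle(self) -> dict[str, str]:
        # Stored subscriptions survive a reboot, so the device can re-establish
        # its routes by name without the controller software running.
        return dict(self.subscriptions)


if __name__ == "__main__":
    console = DanteReceiver("FOH-Console")
    console.subscribe("Input 01", "Mic 1", "Stagebox-A")
    console.subscribe("Input 02", "Mic 2", "Stagebox-A")
    print(console.after_power_cycle())
```

One practical consequence of name-based subscriptions is that renaming a transmitting device or channel breaks the routes that point to it.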

Bridging Dante and AES67:

Dante and AES67 can work in tandem, leveraging Dante’s robust network management and low-latency capabilities alongside AES67’s emphasis on interoperability across different audio networking platforms. This combination enables a more flexible and comprehensive audio networking system.

- Use AES67 as an interoperability tool: AES67 can serve as a bridge in a Dante-dominated environment, allowing devices that are primarily configured for other protocols to communicate and integrate seamlessly with Dante-enabled devices.

- Configure network settings appropriately: When integrating Dante and AES67, ensure that the network settings, such as synchronization and audio sample rates, are aligned across devices to maintain audio integrity and minimize latency.

- Employ Dante Controller for management: Use Dante Controller to manage Dante devices and configure AES67 streams within the Dante network. This approach harnesses Dante's ease of configuration and network stability while accommodating AES67 devices.
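The "align your settings" advice can be turned into a simple pre-flight check. In this sketch, `StreamFormat` and `mismatches` are hypothetical names, and the four fields are an assumed minimum set. Including the PTP domain is an assumption based on the fact that devices must follow the same PTP clock to stay synchronized.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class StreamFormat:
    """Hypothetical summary of one endpoint's AES67-relevant settings."""
    sample_rate_hz: int
    bits_per_sample: int
    packet_time_us: int
    ptp_domain: int


def mismatches(tx: StreamFormat, rx: StreamFormat) -> list[str]:
    """Return one message per setting that differs between transmitter and receiver."""
    problems = []
    for f in fields(StreamFormat):
        a, b = getattr(tx, f.name), getattr(rx, f.name)
        if a != b:
            problems.append(f"{f.name}: tx={a} rx={b}")
    return problems


if __name__ == "__main__":
    dante_tx = StreamFormat(48_000, 24, 1_000, ptp_domain=0)
    other_rx = StreamFormat(48_000, 24, 1_000, ptp_domain=1)
    print(mismatches(dante_tx, other_rx) or "formats match")
```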

By understanding and deploying both Dante and AES67 according to their strengths—Dante for its network management and latency advantages, and AES67 for its interoperability—you can create a versatile and efficient audio network that accommodates a wide range of devices and protocols. This strategy ultimately enhances the capability and flexibility of audio networking systems in various professional settings.

Network Device Interface (NDI) for Audio:

For audio, NDI supports multichannel, low-latency digital audio that can be routed independently of video, giving broadcasters flexible control over audio streams. This capability is crucial for remote productions where audio sources may not be physically close to the video sources or the production switcher. NDI Bridge can send audio and video over a WAN from one LAN to another, as though both were on a single local area network.

NDI Audio Direct consists of a series of plugins that enable audio software applications to fully integrate with NDI-enabled networks. It allows these applications to send, receive, and manage multichannel audio streams with high fidelity and minimal latency. This capability is crucial for modern production environments where seamless audio integration across diverse software and hardware ecosystems is necessary.

More on Remote Audio Production

Source-Connect remains a favorite among audio professionals, known for its ultra-low-latency streaming and its ability to record and monitor simultaneously. It works with any digital audio workstation (DAW) and allows multi-track recording with participants in different time zones, making it ideal for high-quality, synchronous audio workflows. Audiomovers Listento is another powerful tool, offering high-resolution audio (32-bit/96 kHz) and support for multiple channel formats, including mono, stereo, quad, and surround. Its built-in recorder and user-selectable latencies make it well suited to ADR (automatic dialogue replacement) sessions that demand detailed audio work.
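To put "high-resolution" in network terms, here is the raw bitrate of uncompressed PCM at 32-bit/96 kHz for the channel formats mentioned. This is an upper-bound sketch. It does not describe how Listento or Source-Connect actually encode their streams, and 5.1 is assumed as the surround layout.

```python
def pcm_bitrate_bps(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Raw bitrate of uncompressed PCM audio, excluding any transport overhead."""
    return sample_rate_hz * bits_per_sample * channels


if __name__ == "__main__":
    # Channel counts for the formats named above; 5.1 surround is six channels.
    for label, ch in (("mono", 1), ("stereo", 2), ("quad", 4), ("5.1 surround", 6)):
        mbps = pcm_bitrate_bps(96_000, 32, ch) / 1_000_000
        print(f"32-bit/96 kHz {label}: {mbps:.3f} Mbps")
```

A stereo stream at this resolution needs about 6.1 Mbps before overhead, which is within reach of most broadband uplinks, while 5.1 at about 18.4 Mbps may not be.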

Source-Connect is licensed either through a one-time purchase or a monthly subscription, and it is considered the industry standard compared to alternatives like ipDTL, CleanFeed, and Zencastr. The standard version meets the needs of most voice actors, though other paid tiers and a free version, Source-Connect Now, are also available. Professional work, however, typically requires a paid version, as stipulated by agencies and clients.

SonoBus is an innovative application designed to streamline the process of streaming high-quality, low-latency audio between devices, whether over the internet or on a local network. This versatile tool stands out due to its ease of use and robust functionality, making it an excellent choice for audio professionals, musicians, and anyone needing to manage audio across different devices and platforms.

One of the most significant advantages of SonoBus is its ability to deliver peer-to-peer audio streaming with minimal latency. This feature is particularly valuable for live performances, rehearsals, and remote recording sessions, where real-time collaboration is essential, and delays can disrupt the flow of interaction.
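Where the delay in a live session comes from can be estimated with simple arithmetic. The model below is a generic, simplified assumption, not SonoBus's internal calculation: one audio buffer at the sender, one at the receiver, plus network transit and a receive-side jitter buffer. The session figures in the example are hypothetical.

```python
def buffer_ms(frames: int, sample_rate_hz: int = 48_000) -> float:
    """Duration of an audio buffer of `frames` samples, in milliseconds."""
    return frames * 1000.0 / sample_rate_hz


def one_way_latency_ms(network_ms: float, jitter_buffer_ms: float,
                       audio_buffer_frames: int, sample_rate_hz: int = 48_000) -> float:
    """Simplified one-way estimate for uncompressed peer-to-peer audio.

    Assumed model: one capture buffer at the sender, one playback buffer at the
    receiver, plus network transit and the receiver's jitter buffer.
    """
    device_ms = 2 * buffer_ms(audio_buffer_frames, sample_rate_hz)
    return network_ms + jitter_buffer_ms + device_ms


if __name__ == "__main__":
    # Hypothetical session: 20 ms network path, 10 ms jitter buffer, 128-frame buffers.
    print(f"{one_way_latency_ms(20, 10, 128):.1f} ms")
```

In this model the audio buffers add only a few milliseconds, so over the internet the network path and jitter buffer usually dominate. That is why a direct peer-to-peer route, with no relay server in between, tends to matter most.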

SonoBus supports uncompressed PCM audio, ensuring that the audio quality remains pristine and lossless throughout the transmission. This capability is crucial for audio professionals and enthusiasts who require the highest fidelity in their projects.

The application interface takes some getting used to, but it is ultimately well laid out for the features it offers. Users can connect multiple devices across different operating systems, including Mac, Windows, iOS, and Android. This cross-platform compatibility is useful when you are producing audio remotely and want to add more sources to the mix.

SonoBus is completely free, making it an accessible option for individuals and organizations who might otherwise be unable to afford similar high-quality audio streaming solutions. SonoBus users can stream audio directly into most digital audio workstation (DAW) software.

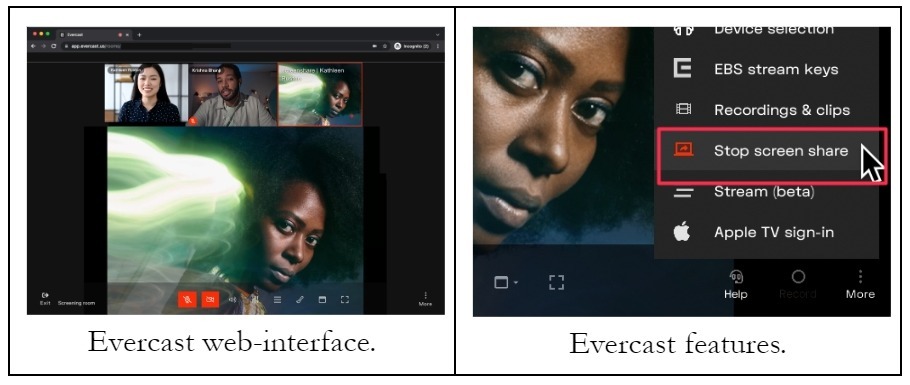

Evercast is a real-time collaboration platform designed specifically for creatives involved in video production. It integrates video conferencing, HD live-streaming, and high-quality audio into a single web-based platform, making it convenient for various stages of production. The platform allows teams to share their creative workflows with ultra-low latency and high-quality output. This includes live cameras on set, as well as software like Avid, Premiere, Maya, and Pro Tools. Evercast’s design focuses on enhancing creative collaboration, simulating an environment where team members are working together in the same space.

By bringing together remote audio and video sources, these tools enable professionals to conduct live shows, recording sessions, and collaborative projects efficiently and at high quality. In an upcoming chapter, you will learn about hardware audio mixers that support remote mixing of physical XLR and ¼” audio inputs. Understanding your software and hardware options for remote audio production will help you choose the best solution for your next project.

Video Switchers in the Cloud

Live video switching is integral to remote production, enabling directors to select and switch between video feeds in real time. Switchers can be hardware-based or software-based. Software video switchers are increasingly used in remote production for their flexibility and scalability, and they are particularly advantageous when you want to run the production in the cloud.

Earlier in the book, V2Cloud was mentioned as an easy way to deploy OBS in the cloud. You can also deploy vMix in the cloud by following vMix's published guidelines. To virtualize vMix and similar software in the cloud effectively, follow these steps.

vMix Cloud Setup Requirements:

- Server Instance: Utilize a Windows Server 2019 x64, allocating at least 4 CPU cores to handle the demands of live production.

- Graphics and Display: It’s crucial to have directly attached graphics with virtual display support, often available via technologies like NVIDIA Grid or NVIDIA Workstation Graphics. For Amazon EC2, instances labeled “G4” or higher are suitable.

- Drivers: Only NVIDIA Grid drivers are compatible, and they must be installed as per Amazon EC2’s guidance. Regular NVIDIA drivers are insufficient for this setup.

- Remote Access: Avoid using Remote Desktop (RDP) for managing vMix. Options like VNC, Splashtop, TeamViewer and AnyDesk, or cloud-based desktop solutions like Teradici (which might involve additional costs) are recommended for optimal performance.

- Display Configuration: Ensure the graphics card-connected display is set as the primary within Windows settings. All other monitors, especially those connected to a “Microsoft Basic Adapter,” should be disabled to prevent conflicts.
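The checklist above can be encoded as a pre-flight check before you launch vMix. This is a hypothetical sketch. `CloudInstance` and its field names are illustrative rather than any cloud provider's API, and the instance-family parsing is a rough heuristic based on EC2 naming. The display configuration and the MP4 playback test still have to be verified by hand on the running machine.

```python
import re
from dataclasses import dataclass


@dataclass
class CloudInstance:
    """Hypothetical summary of a cloud VM; field names are illustrative only."""
    os_name: str        # e.g. "Windows Server 2019 Datacenter"
    cpu_cores: int
    instance_type: str  # Amazon EC2 instance type, e.g. "g4dn.xlarge"
    gpu_driver: str     # "grid" or "standard"
    remote_access: str  # e.g. "rdp", "vnc", "teradici"


def check_vmix_requirements(vm: CloudInstance) -> list[str]:
    """Return the checklist items this VM does not meet (empty list = all met)."""
    issues = []
    if "windows server 2019" not in vm.os_name.lower():
        issues.append("use Windows Server 2019 x64")
    if vm.cpu_cores < 4:
        issues.append("allocate at least 4 CPU cores")
    itype = vm.instance_type.lower()
    # EC2 GPU families are named 'g' plus a generation number (g4dn, g5, ...).
    match = re.match(r"g(\d+)", itype)
    if match is None or int(match.group(1)) < 4:
        issues.append("use a G4 or newer GPU instance")
    elif itype.startswith("g4ad"):
        # g4ad instances use AMD GPUs, so NVIDIA GRID drivers do not apply.
        issues.append("choose an NVIDIA-based instance (for example g4dn)")
    if vm.gpu_driver.lower() != "grid":
        issues.append("install NVIDIA GRID drivers, not regular NVIDIA drivers")
    if vm.remote_access.lower() == "rdp":
        issues.append("avoid RDP; use VNC, Splashtop, TeamViewer, AnyDesk or Teradici")
    return issues


if __name__ == "__main__":
    vm = CloudInstance("Windows Server 2019 Datacenter", 4, "g4dn.xlarge", "grid", "rdp")
    for issue in check_vmix_requirements(vm):
        print("-", issue)
```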

Testing the Setup:

- Launch vMix and add an MP4 video file as an input to check the graphics configuration. If the MP4 file displays properly, the setup is working; problems displaying it suggest that vMix is not accessing the graphics card correctly.

Benefits of Virtualizing vMix in the Cloud:

- Scalability and Flexibility: Cloud instances can be scaled up or down based on the production requirements, providing flexibility in resource management.

- Accessibility: Producers and directors can access the production setup from anywhere, reducing the need for physical infrastructure.

- Cost Efficiency: Reduces the overhead costs associated with physical hardware and minimizes the maintenance expenses.

- Reliability: Cloud platforms often offer high uptime and reliability compared to physical servers, which is critical for live production environments.

It’s crucial to thoroughly test the entire setup in a cloud environment to ensure that it meets the demands of your production, especially since cloud services can be shared and might not always guarantee consistent performance. This setup process, when properly managed, can significantly enhance the flexibility and scalability of remote video production workflows.

WHIP (WebRTC-HTTP Ingest Protocol) and WebRTC (Web Real-Time Communication)

The integration of WHIP and WebRTC into remote production setups brings a host of benefits, particularly by addressing the long-standing issue of latency in live broadcasts. WHIP (WebRTC-HTTP Ingest Protocol) and WebRTC (Web Real-Time Communication) technologies allow for the delivery of audio and video content in real-time with minimal delay. This is crucial in remote production where the synchronicity and immediacy of feeds directly influence the quality of interaction and engagement during live events.
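WHIP standardizes only the signaling step of getting a WebRTC stream into a server. The encoder POSTs an SDP offer with `Content-Type: application/sdp` to a WHIP endpoint. The server replies `201 Created` with the SDP answer in the body and a `Location` header that identifies the session, and the encoder later sends `DELETE` to that URL to stop. This sketch uses only Python's standard library and builds the requests without sending them. The endpoint URL, token, and placeholder offer are hypothetical, and a real client also needs a WebRTC stack to generate the offer and carry the media.

```python
import urllib.request
from typing import Optional
from urllib.parse import urljoin


def whip_offer_request(endpoint: str, sdp_offer: str,
                       token: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) the HTTP POST that carries the SDP offer."""
    headers = {"Content-Type": "application/sdp"}
    if token:
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(endpoint, data=sdp_offer.encode("utf-8"),
                                  headers=headers, method="POST")


def session_url(endpoint: str, location: str) -> str:
    """Resolve the Location header of the 201 Created response (may be relative)."""
    return urljoin(endpoint, location)


def whip_teardown_request(session: str, token: Optional[str] = None) -> urllib.request.Request:
    """Ending the stream is an HTTP DELETE on the session resource."""
    headers = {"Authorization": f"Bearer {token}"} if token else {}
    return urllib.request.Request(session, headers=headers, method="DELETE")


if __name__ == "__main__":
    endpoint = "https://example.com/whip/endpoint"  # hypothetical WHIP endpoint
    offer = "v=0\r\n"  # placeholder; a real offer comes from the WebRTC stack
    req = whip_offer_request(endpoint, offer, token="example-token")
    print(req.get_method(), req.full_url, req.get_header("Content-type"))
    print(session_url(endpoint, "/whip/resource/123"))
```

Because signaling is a single HTTP exchange, any encoder that implements it, including OBS Studio, can publish to any compliant WHIP server without vendor-specific plugins.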

For producers and broadcasters, the ability to stream high-quality content with low latency means improved coordination among production teams, more dynamic interactions with live audiences, and the capacity to manage multiple feeds smoothly without the traditional delays that can detract from the viewing experience. These technologies not only enhance the viewer’s experience by providing smoother, more immediate content but also empower producers to execute more complex and interactive live events.

Furthermore, OBS Studio 30.0, a leading free and open-source application for video recording and live streaming, added WHIP output over WebRTC, putting high-quality, low-latency production capabilities within everyone's reach. OBS users can experiment with and deploy these technologies in their productions at no additional cost, which opens up possibilities for creators of all levels to explore innovative broadcasting techniques and improve their production workflows.

